Learning by Asking Questions

People

Ishan Misra, Ross Girshick, Rob Fergus, Martial Hebert, Abhinav Gupta, Laurens van der Maaten

Moving away from Passive Learning

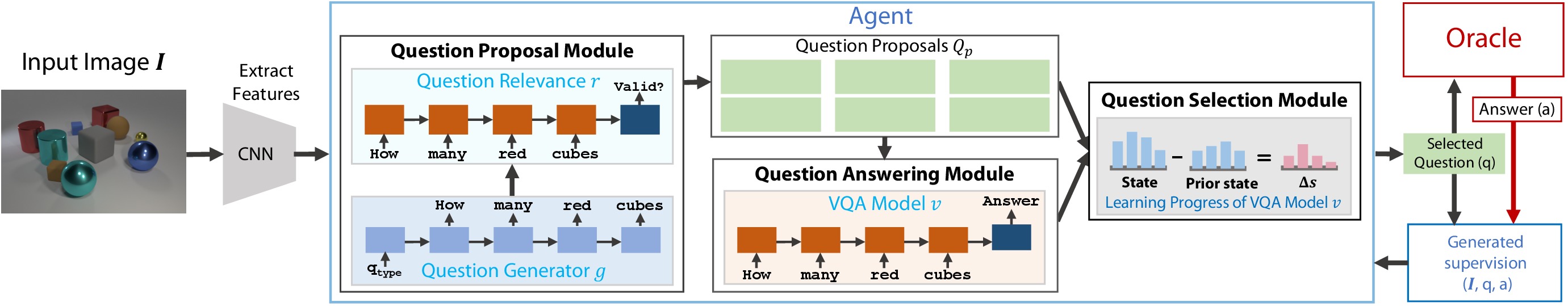

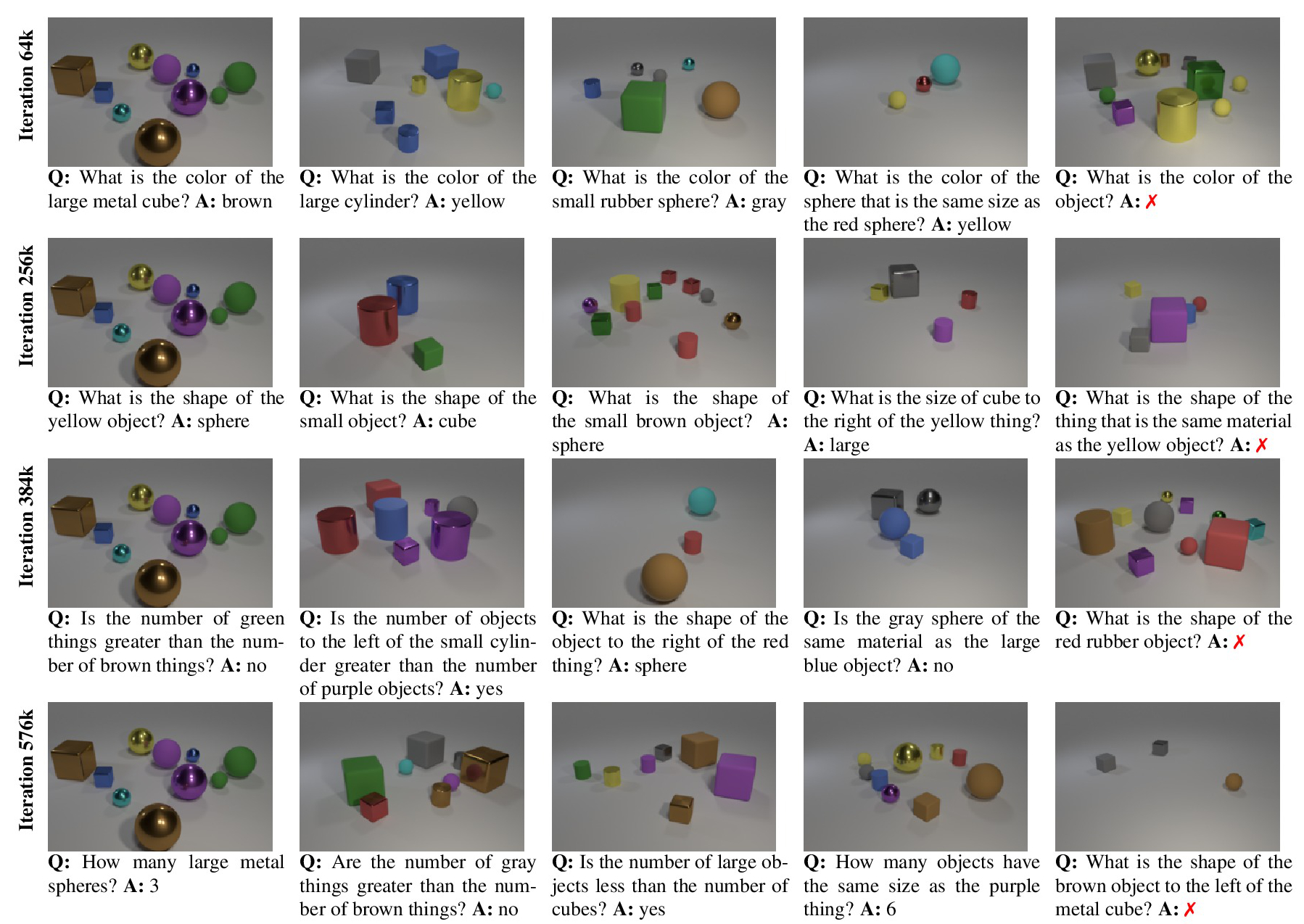

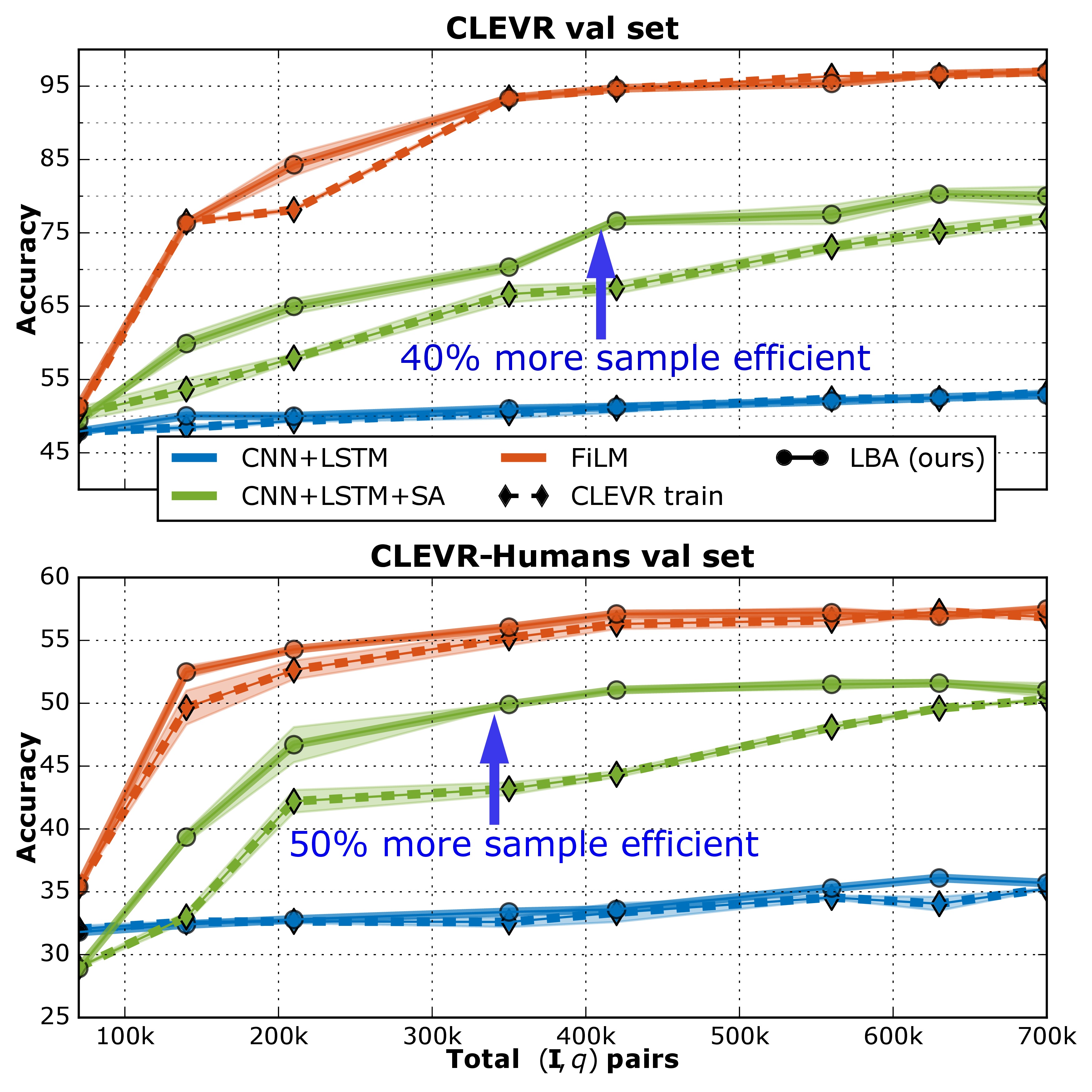

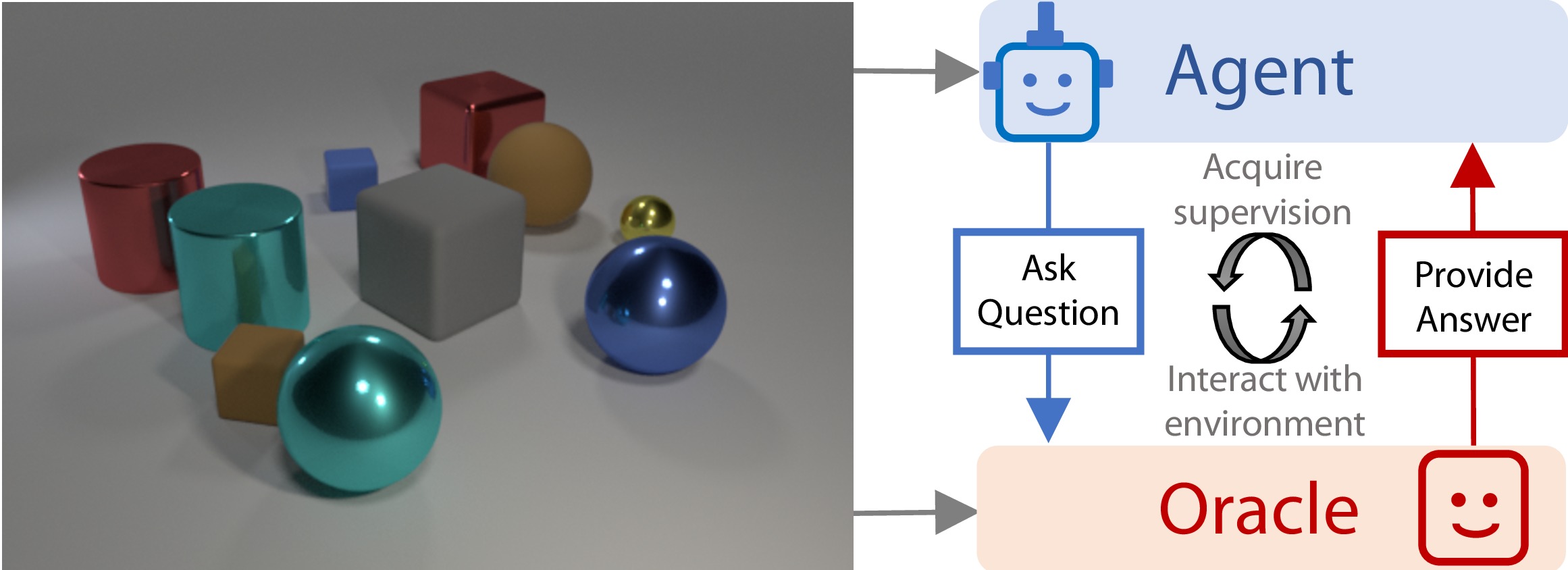

While recognition systems show a lot of promise in the passively supervised setting, it remains unclear how to move from there to interactive agents. In this paper, we propose an interactive Visual Question Answering (VQA) setting in which agents must ask questions about images in order to learn. As a bonus, our test-time setup is exactly that of VQA, which means that we can use well-understood metrics for evaluation.
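To make the setting concrete, here is a minimal toy sketch of an ask-then-evaluate loop. It is not the method from the paper: the "images" are plain integer codes, the oracle is a lookup into a made-up scene, and the learner simply memorizes answers, so the sketch does not test generalization to unseen images. Every name in it (`QUESTIONS`, `hidden_scene`, `oracle`, `TableLearner`, `vqa_accuracy`, `run`) is hypothetical and chosen only for illustration. What it does show is the shape of the interaction: the learner picks its own questions during training, and evaluation is ordinary question-answering accuracy.

```python
import random

# Hypothetical question vocabulary for the toy world.
QUESTIONS = ["color", "shape"]


def hidden_scene(code):
    """Ground truth for a toy 'image' (an integer code); only the oracle sees it."""
    return {
        "color": ["red", "blue", "green"][code % 3],
        "shape": ["cube", "sphere"][code % 2],
    }


def oracle(code, question):
    """Answer a question about an image from the hidden ground truth."""
    return hidden_scene(code)[question]


class TableLearner:
    """Toy learner that memorizes (image, question) -> answer pairs."""

    def __init__(self):
        self.memory = {}

    def choose_question(self, code, rng):
        # Prefer questions whose answer for this image is still unknown.
        unknown = [q for q in QUESTIONS if (code, q) not in self.memory]
        return rng.choice(unknown or QUESTIONS)

    def update(self, code, question, answer):
        self.memory[(code, question)] = answer

    def answer(self, code, question):
        return self.memory.get((code, question), "unknown")


def vqa_accuracy(learner, test_set):
    """Fraction of (image, question, answer) triples answered correctly."""
    correct = sum(learner.answer(c, q) == a for c, q, a in test_set)
    return correct / len(test_set)


def run(num_rounds, seed=0, num_codes=6):
    """Run the interactive loop; return accuracy before and after asking."""
    rng = random.Random(seed)
    learner = TableLearner()
    # Test time is plain question answering: no asking, only answering.
    test_set = [(c, q, oracle(c, q)) for c in range(num_codes) for q in QUESTIONS]
    before = vqa_accuracy(learner, test_set)
    for _ in range(num_rounds):
        code = rng.randrange(num_codes)            # an image is presented
        question = learner.choose_question(code, rng)  # the learner asks
        learner.update(code, question, oracle(code, question))  # and learns
    return before, vqa_accuracy(learner, test_set)


if __name__ == "__main__":
    before, after = run(num_rounds=50)
    print(f"accuracy before asking: {before:.2f}, after asking: {after:.2f}")
```

In a realistic version of this setting, the memorizing table would be replaced by a trainable VQA model and the integer codes by real images; the training loop and the accuracy metric would keep the same structure.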

Some Results

Paper

Ishan Misra, Ross Girshick, Rob Fergus, Martial Hebert, Abhinav Gupta, Laurens van der Maaten. Learning by Asking Questions. [pdf] [bib]

Acknowledgement

The authors would like to thank Arthur Szlam, Jason Weston, Saloni Potdar and Abhinav Shrivastava for helpful discussions and feedback on the manuscript; Soumith Chintala and Adam Paszke for their help with PyTorch.